Google’s AMIE AI is making waves because, frankly, it juggles X-rays, clinical notes, and even doctor banter with the finesse of a medical MacGyver. It’s not just guessing—AMIE scored higher than some doctors on multimodal diagnosis, shines in image reasoning, and can actually remember your last “visit.” Is it flawless? Nope (someone call Dr. House). But if you’re curious about how an algorithm can out-chat and out-interpret the pros, there’s a lot more under the hood.

AMIE doesn’t just spit out canned answers. Thanks to its adaptive dialogue and state-aware reasoning, it can actually tweak its responses if it’s unsure, or if you’re suddenly panicking in the chat. It’s not just reading the script; it’s reading the room.
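To make "state-aware" a bit more concrete, here is a toy sketch of the idea: keep a running state for the conversation and let it decide what the next turn looks like. Everything in it (`DialogueState`, `next_turn`, the `confidence` score, the `0.7` threshold) is a hypothetical illustration, not AMIE's actual design or API.

```python
from dataclasses import dataclass, field


@dataclass
class DialogueState:
    """Hypothetical running summary of the conversation so far."""
    facts: list[str] = field(default_factory=list)
    confidence: float = 0.0        # toy 0..1 score, not a real AMIE metric
    patient_anxious: bool = False  # assumed flag set by some upstream check


def next_turn(state: DialogueState, threshold: float = 0.7) -> str:
    """Pick the next reply based on the current state (illustrative only)."""
    # Adjust tone first: acknowledge worry before saying anything clinical.
    prefix = "I understand this is worrying. " if state.patient_anxious else ""
    # If the agent is unsure, ask for more information instead of answering.
    if state.confidence < threshold:
        return prefix + "Can you tell me a bit more about your symptoms?"
    return prefix + "Based on what you've shared, here is my assessment."


calm_but_unsure = DialogueState(confidence=0.3)
anxious_and_sure = DialogueState(confidence=0.9, patient_anxious=True)
print(next_turn(calm_but_unsure))
print(next_turn(anxious_and_sure))
```

The point of the sketch is only the control flow: the reply depends on what the agent currently knows and how the patient seems to be doing, rather than on a fixed script.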

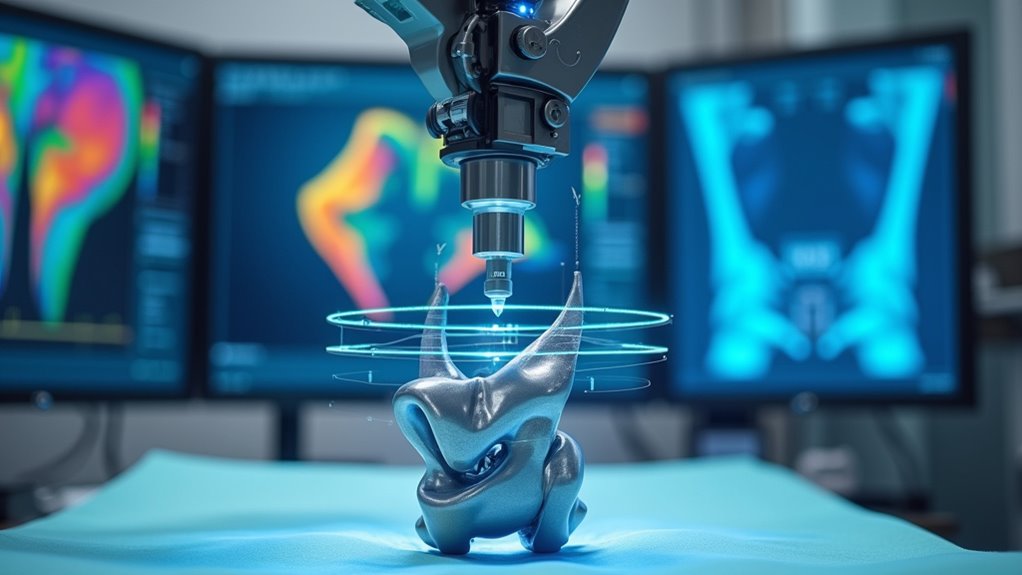

Combine that with its ability to integrate both text and images, and you get an agent that can mimic the real-life back-and-forth of a clinic visit. Google created a detailed simulation lab to test AMIE’s abilities in a controlled environment, ensuring its performance was measured rigorously.

- Analyze images? Check.

- Handle documents? Absolutely.

- Remember details from previous visits? You bet.

And before you ask, “Can it really keep up with a real doctor?”—well, in randomized, blinded studies where specialists didn’t know if a care plan came from AMIE or a human, AMIE’s plans were rated *non-inferior* to those from flesh-and-blood doctors.

That’s right, it stood its ground in Objective Structured Clinical Exams (OSCEs), the medical world’s version of “American Ninja Warrior.”

The system isn’t flying solo, either. With a two-agent architecture—one for chat, one for management reasoning—it juggles complex cases and sticks to established clinical guidelines, like NICE.
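For the curious, the two-agent split can be pictured with a minimal sketch like the one below. It assumes one agent that talks to the patient and writes to a shared record, and a second agent that reads the whole record and proposes next steps from a guideline lookup. All class names, the `TOY_GUIDELINES` table, and its contents are made up for illustration; they are not real NICE guidance or Google's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class PatientRecord:
    """Hypothetical shared memory: one list of messages per visit."""
    visits: list[list[str]] = field(default_factory=list)


class DialogueAgent:
    """Handles the conversation and stores what the patient said."""

    def handle_visit(self, record: PatientRecord, messages: list[str]) -> str:
        record.visits.append(list(messages))
        return f"Thanks, I've noted {len(messages)} item(s) from today's visit."


# Placeholder lookup table: NOT real clinical guidance.
TOY_GUIDELINES = {
    "chest pain": "toy action: escalate for clinician review",
    "persistent cough": "toy action: schedule a follow-up visit",
}


class ManagementAgent:
    """Reads the full record across visits and proposes a plan."""

    def plan(self, record: PatientRecord) -> list[str]:
        # Flatten every visit into one lowercase string to search.
        mentioned = " ".join(m.lower() for visit in record.visits for m in visit)
        return [action for key, action in TOY_GUIDELINES.items() if key in mentioned]


record = PatientRecord()
chat, manager = DialogueAgent(), ManagementAgent()
chat.handle_visit(record, ["I've had a persistent cough for weeks."])
chat.handle_visit(record, ["Now I also get chest pain on the stairs."])
print(manager.plan(record))
```

Keeping the two roles apart means the chat side can stay responsive while the planning side looks at the full history across visits, which is the division of labor the architecture describes.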

It even processes *years* of health data in a single swoop, which, let’s be honest, most humans can’t.

AMIE outperformed primary care physicians (PCPs) in interpreting multimodal data and in consultation quality, consistently scoring higher on diagnostic accuracy and image reasoning in head-to-head controlled studies.

Much like DeepMind’s success with diabetic retinopathy detection, AMIE represents a significant advancement in AI-powered diagnostic capabilities that could transform early disease identification.

Is it perfect? No. There are privacy questions, ethical debates, and the ever-present need to prove it won’t turn into WebMD on steroids.

But for now, AMIE’s blend of visual and conversational smarts might just mean your next virtual consult won’t need a human at all—unless you really miss the awkward small talk.