Training an AI model starts with a chaotic hoard of data—think mountains of sloppy cat selfies or cryptic bank spreadsheets. This data gets scrubbed, labeled, and massaged into shape, like digital laundry day. Next, it’s fed to algorithms (yes, sometimes even neural networks, which sound fancier than they are), all while avoiding rookie mistakes like melting your laptop. The goal? Teach machines to spot the difference between a chihuahua and a muffin—seriously. Curious what magic happens next?

Of course, data doesn’t arrive in a tidy bundle. It comes in all sorts of formats (text files, pixelated images, fuzzy audio), and each one needs its own TLC. You’ve got to store it somewhere safe too, preferably not on a thumb drive labeled “definitely_not_sensitive_info.zip.” That means secure storage with access controls, and privacy is non-negotiable: personal details get anonymized, and the raw data gets locked away like a Netflix password.
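
Here’s a minimal Python sketch of one common privacy step, assuming each record carries a direct identifier such as an email address. It swaps the email for a keyed HMAC-SHA256 token using only the standard library. `SECRET_KEY`, `pseudonymize`, and the sample records are hypothetical. Strictly speaking this is *pseudonymization* rather than full anonymization: the same person always maps to the same token, and the remaining columns can sometimes still re-identify them.

```python
import hashlib
import hmac

# Hypothetical key for illustration only. In practice, load it from a secrets
# manager and keep it separate from the data it protects.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC).

    The same input always yields the same token, so records can still be
    joined. Without the key, nobody can recompute tokens to test guesses
    such as lists of common email addresses.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical sample records containing a direct identifier.
records = [
    {"email": "ada@example.com", "purchase": 42.0},
    {"email": "grace@example.com", "purchase": 17.5},
    {"email": "ada@example.com", "purchase": 8.25},
]

# Swap the email for a token before the data leaves the secure store.
safe_records = [
    {"user_token": pseudonymize(r["email"]), "purchase": r["purchase"]}
    for r in records
]

for row in safe_records:
    print(row["user_token"][:12], row["purchase"])
```

The key matters: a plain, unkeyed hash of an email can be reversed just by hashing a list of known addresses and looking for matches.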

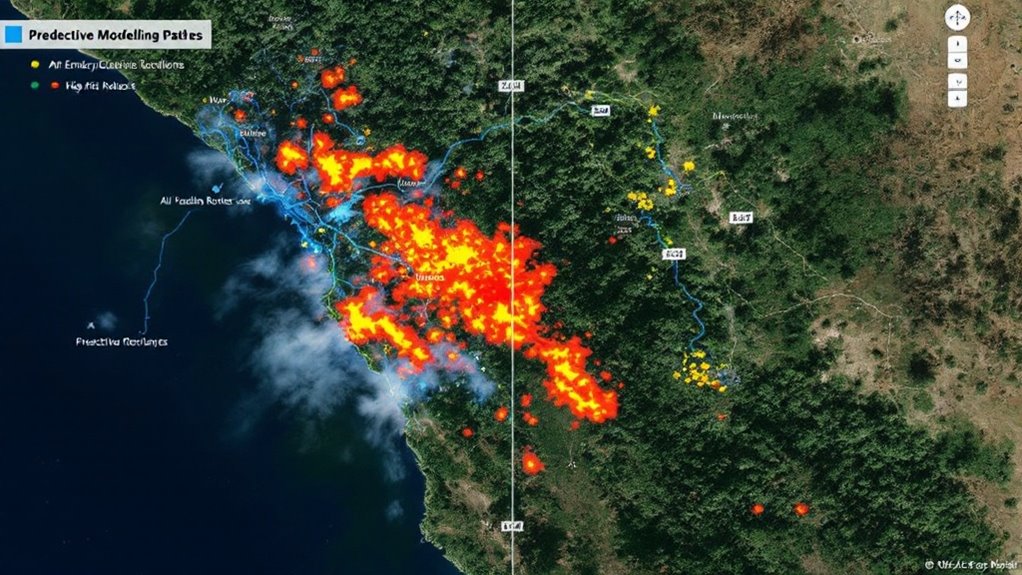

Now, the fun part: *preprocessing*. This is where you clean up the data: drop duplicates, fix typos, and normalize numeric features onto comparable scales so one weirdo feature with big numbers doesn’t dominate the rest (looking at you, “age” column). Feature engineering then turns raw fields into inputs a model can actually use, such as pulling the day of the week out of a timestamp. Sometimes you even *create* new data through augmentation, like flipping an image or adding background noise to audio. And don’t forget labeling, because models need to be told that a hot dog isn’t a cat. A model can only be as accurate as the data it learns from, so careful curation at this stage pays off later.
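
Here’s a minimal, standard-library-only Python sketch of two of those chores: dropping exact duplicate rows and z-score standardizing each numeric column. The toy `rows` (age plus a hypothetical `clicks_per_visit` feature) and the `standardize` helper are made up for illustration. Real projects usually lean on a library such as pandas or scikit-learn for this.

```python
import statistics

# Hypothetical toy rows: (age_years, clicks_per_visit), with one exact duplicate.
rows = [
    (25, 0.20),
    (32, 0.35),
    (25, 0.20),  # duplicate of the first row
    (47, 0.90),
    (51, 0.60),
]

# 1. Drop exact duplicates; dict.fromkeys keeps the first occurrence, in order.
deduped = list(dict.fromkeys(rows))


def standardize(column):
    """Z-score scaling: subtract the mean, then divide by the standard deviation."""
    mean = statistics.mean(column)
    stdev = statistics.pstdev(column)
    if stdev == 0:  # a constant column carries no information; map it to zeros
        return [0.0 for _ in column]
    return [(x - mean) / stdev for x in column]


# 2. Scale each feature separately so age (tens of years) no longer swamps
#    clicks_per_visit (fractions) in anything that compares magnitudes.
ages, clicks = zip(*deduped)
scaled_rows = list(zip(standardize(ages), standardize(clicks)))

for raw, scaled in zip(deduped, scaled_rows):
    print(raw, "->", tuple(round(v, 2) for v in scaled))
```

One habit worth keeping: compute the mean and standard deviation on the training split only, then reuse those same numbers for validation and test data. That way, no information from held-out data leaks into training.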

Preprocessing is the spa day for your data: scrub it, fix it, augment it, and label it so your model knows a hot dog from a cat.
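
The two augmentation tricks mentioned earlier, flipping an image and adding background noise to audio, each fit in a few lines of plain Python. This sketch assumes a grayscale image stored as nested lists of pixel values and audio stored as floats in [-1.0, 1.0]. The helper names `flip_horizontal` and `add_noise` are hypothetical, and real pipelines typically use a dedicated image or audio library instead.

```python
import random

# A tiny 3x3 grayscale "image" as nested lists of pixel intensities (0-255).
image = [
    [0, 50, 100],
    [10, 60, 110],
    [20, 70, 120],
]


def flip_horizontal(img):
    """Mirror each row left-to-right, returning a new image (the input is untouched)."""
    return [list(reversed(row)) for row in img]


def add_noise(samples, stddev=0.01, seed=None):
    """Add Gaussian noise to an audio signal given as floats in [-1.0, 1.0]."""
    rng = random.Random(seed)
    noisy = [s + rng.gauss(0.0, stddev) for s in samples]
    # Clip so the augmented clip stays in the valid amplitude range.
    return [max(-1.0, min(1.0, s)) for s in noisy]


audio = [0.0, 0.5, -0.5, 0.99]

augmented_image = flip_horizontal(image)
augmented_audio = add_noise(audio, stddev=0.02, seed=0)

print(augmented_image)
print([round(s, 3) for s in augmented_audio])
```

Each augmented copy keeps the original’s label. That only works when the transformation doesn’t change what the example shows: a mirrored cat is still a cat, but a mirrored “b” becomes a “d.”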

Addressing bias belongs at this stage too. If a group is underrepresented in your data, or the labels reflect skewed historical decisions, the model will learn those patterns as well and make unfair or inaccurate predictions.
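
A first, very rough bias check is simply counting. How well is each group represented, and how often does each group get the positive label? The Python sketch below assumes each example carries a group attribute, and the `examples` data is invented for illustration. The min/max ratio at the end is one common heuristic, not a complete fairness audit. Some practitioners borrow the “four-fifths rule” and treat a ratio below 0.8 as a warning sign.

```python
from collections import Counter, defaultdict

# Hypothetical labeled examples: (group, label), where label 1 means a positive
# outcome such as "loan approved". The values are invented for illustration.
examples = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1), ("A", 1), ("A", 0),
    ("B", 0), ("B", 0), ("B", 1),
]

# 1. Representation: what share of the dataset does each group make up?
counts = Counter(group for group, _ in examples)
share = {g: n / len(examples) for g, n in counts.items()}

# 2. Label balance: what fraction of each group has the positive label?
positives = defaultdict(int)
for group, label in examples:
    positives[group] += label
positive_rate = {g: positives[g] / counts[g] for g in counts}

# 3. One rough summary: lowest positive rate divided by the highest
#    (1.0 means equal rates; guard against a dataset with no positives).
highest = max(positive_rate.values())
parity_ratio = min(positive_rate.values()) / highest if highest else 1.0

for g in sorted(counts):
    print(f"group {g}: {share[g]:.0%} of data, {positive_rate[g]:.0%} positive")
print(f"positive-rate ratio: {parity_ratio:.2f}")
```

A big gap is a prompt to dig in, for example by collecting more data, re-checking labels, or reweighting. It isn’t proof of bias on its own, and a balanced ratio doesn’t prove fairness either.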

Choosing a model is like picking a Marvel hero for your team: do you want something simple and interpretable, or flashy and complex? A decision tree, a random forest, or a neural network? It depends on your problem, how much data you have, and whether you need to explain the model’s decisions. And yes, there are tools to help (scikit-learn even publishes a flowchart for choosing an estimator), because no one remembers all the options off the top of their head.
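
To get a feel for the simple-versus-flexible trade-off, here’s a toy Python sketch. It fits two very different models on the same made-up data and compares them on held-out examples. The first is a one-split decision stump, the smallest possible decision tree and easy to read. The second is k-nearest neighbors, which is more flexible but harder to explain. The features (`weight_kg`, `roundness`), the data, and the helper functions are all hypothetical. On a dataset this tiny, both models may well score perfectly; the point is the comparison pattern, not the scores.

```python
import math
from collections import Counter

# Hypothetical toy data: ((weight_kg, roundness), label). Values are made up.
train = [
    ((2.0, 0.30), "chihuahua"), ((2.5, 0.40), "chihuahua"), ((3.0, 0.20), "chihuahua"),
    ((0.10, 0.90), "muffin"), ((0.20, 0.80), "muffin"), ((0.15, 0.95), "muffin"),
]
# Held-out examples the models never see during fitting.
test = [((2.2, 0.35), "chihuahua"), ((0.12, 0.85), "muffin")]


def fit_stump(data):
    """Simple, interpretable: one feature, one threshold. Assumes exactly two labels."""
    labels = sorted({y for _, y in data})
    best = None
    for f in range(len(data[0][0])):
        for x, _ in data:  # try every observed value as a threshold
            t = x[f]
            for below, above in ((labels[0], labels[1]), (labels[1], labels[0])):
                errors = sum((below if xi[f] <= t else above) != yi for xi, yi in data)
                if best is None or errors < best[0]:
                    best = (errors, f, t, below, above)
    _, f, t, below, above = best
    return lambda x: below if x[f] <= t else above


def fit_knn(data, k=3):
    """More flexible: majority vote among the k nearest training examples."""
    def predict(x):
        nearest = sorted(data, key=lambda pair: math.dist(x, pair[0]))[:k]
        return Counter(y for _, y in nearest).most_common(1)[0][0]
    return predict


def accuracy(model, data):
    """Fraction of examples the model labels correctly."""
    return sum(model(x) == y for x, y in data) / len(data)


for name, model in [("decision stump", fit_stump(train)), ("3-NN", fit_knn(train))]:
    print(f"{name}: held-out accuracy {accuracy(model, test):.0%}")
```

The stump’s entire “reasoning” is a single rule you can read off: below one weight threshold, it’s a muffin. The k-NN model can capture messier boundaries, but it can only justify a prediction by pointing at its neighbors.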

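Training is next. Start with a simple baseline, tune hyperparameters (settings you choose rather than ones the model learns, like learning rate or tree depth), check performance on a validation set the model never trained on, and repeat. Keep an eye on compute, too (batch sizes, memory, training time), unless you want your laptop to double as a space heater.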
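
That start-basic, tune, validate, repeat loop can be sketched in plain Python with about the simplest model there is: a straight line fitted by gradient descent. Everything here is an illustrative assumption: the synthetic data (y = 3x + 2 plus noise), the helper names, and the learning-rate grid. The habit it shows is picking the hyperparameter by validation error, measured on data the model never trained on.

```python
import random

# Synthetic data for illustration: y = 3x + 2 plus a little Gaussian noise.
rng = random.Random(42)
xs = [rng.uniform(-1, 1) for _ in range(200)]
data = [(x, 3 * x + 2 + rng.gauss(0, 0.1)) for x in xs]

# Hold out 25% of the data for validation; the model never trains on it.
train, val = data[:150], data[150:]


def fit_linear(points, learning_rate, epochs=200):
    """Fit y = w*x + b with full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(points)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in points) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in points) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(params, points):
    """Mean squared error of the line (w, b) on the given points."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in points) / len(points)


# Try a few learning rates (the hyperparameter) and keep whichever
# scores best on the validation set, not the training set.
results = {}
for lr in (0.001, 0.01, 0.1, 0.5):
    params = fit_linear(train, lr)
    results[lr] = (mse(params, val), params)

best_lr = min(results, key=lambda lr: results[lr][0])
best_val_mse, (w, b) = results[best_lr]
print(f"best learning rate {best_lr}: w={w:.2f}, b={b:.2f}, val MSE={best_val_mse:.4f}")
```

In a real project you’d also keep a separate test set that’s touched only once, at the very end. Repeatedly picking winners on the validation set slowly leaks information into your choices.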

In the end, whether you’re using supervised learning, unsupervised learning, or something in between like semi-supervised learning, it all comes down to tuning and evaluating, over and over, until your AI can finally tell a chihuahua from a blueberry muffin.